I am interested in issues of privacy, bias, explainability, and reliability in machine learning, in particular as they pertain to generative models. A full list of my papers can be found on my Google Scholar.

Preprints / Surveys

Privacy Risks in Large Language Models: A Survey

Publications

Machine Unlearning Doesn’t Do What You Think: Lessons for Generative AI Policy, Research, and Practice [NeurIPS ’25 Position Paper]

Efficient Verification of Data Attribution, [NeurIPS ’25]

Attribute-to-Delete: Machine Unlearning via Datamodel Matching, new paper using techniques from data attribution for unlearning! [ICLR ’25]

Firm Foundations for Membership Inference in Language Models [DIG-BUG Workshop@ICML ’25]

Machine Unlearning Fails to Remove Data Poisoning Attacks [ICLR ’25]

Private Regression in Multiple Outcomes (PRIMO) [TMLR ’25]

Better Counterfactual Model Reasoning with Submodular Quadratic Component Models [NeurIPS ’24 ATTRIB Workshop]

In-Context Unlearning: Language Models are Few Shot Unlearners [ICML ‘24]

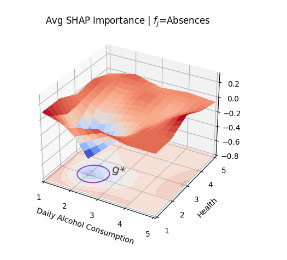

Feature Importance Disparities for Dataset Bias Investigations [ICML ‘24] Code

MusCAT: A multi-scale, hybrid federated system for privacy-preserving epidemic surveillance and risk prediction [2nd Place Grand Prize Winner, 1st Place Phase 1 of the US/UK Privacy Challenge, ’23] White House Announcement

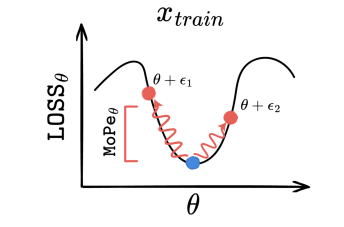

MoPe: Perturbation-based Privacy Attacks Against Language Models [EMNLP ’23, NEURIPS SoLaR Workshop ’23]

On the Privacy Risks of Algorithmic Recourse Code [AI STATS ’23]

Adaptive Machine Unlearning [NEURIPS ’21]

Data Privacy in Practice at LinkedIn [Harvard Business School Case Study ’22]

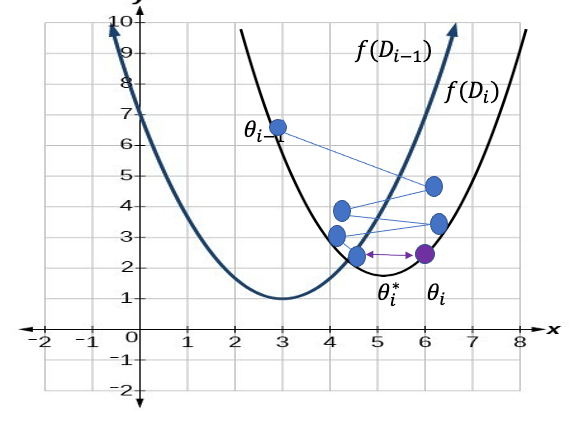

Descent-to-Delete: Gradient-Based Methods for Machine Unlearning [Algorithmic Learning Theory ’21] Code

Eliciting and Enforcing Subjective Individual Fairness [FORC ’21]

Optimal, Truthful, and Private Securities Lending [ACM AI in Finance ’20, NEURIPS Workshop on Robust AI in Financial Services ’19] selected for oral presentation!

Differentially Private Objective Perturbation: Beyond Smoothness and Convexity [ICML ’20, NEURIPS Workshop on Privacy in ML ’19]

A New Analysis of Differential Privacy’s Generalization Guarantees [ITCS ’20] regular talk slot!

The Role of Interactivity in Local Differential Privacy [FOCS ’19]

How to use Heuristics for Differential Privacy [FOCS ’19] Video.

An Empirical Study of Rich Subgroup Fairness for Machine Learning [ACM FAT* ’19, ML track]

- Led development on package integrated into the IBM AI Fairness 360 package here. AIF360 development branch on my Github, with a stand-alone package developed by the AlgoWatch Team.

Fair Algorithms for Learning in Allocation Problems [ACM FAT* ’19, ML track]

Preventing Fairness Gerrymandering: Auditing and Learning for Subgroup Fairness [ICML ’18, EC MD4SG ’18]

Mitigating Bias in Adaptive Data Gathering via Differential Privacy [ICML ’18]

Accuracy First: Selecting a Differential Privacy Level for Accuracy Constrained ERM [NIPS ’17, Journal of Privacy and Confidentiality ’19]

A Framework for Meritocratic Fairness of Online Linear Models [AAAI/AIES ’18]

Rawlsian Fairness for Machine Learning [FATML ’16]

A Convex Framework for Fair Regression [FATML ’17]

Math stuff from College & High School

Aztec Castles and the dP3 Quiver [Journal of Physics A ’15]

Mahalanobis Matching and Equal Percent Bias Reduction[Senior Thesis, Harvard ’15]

Plane Partitions and Domino Tilings [Intel Science Talent Search Semifinalist, ’11]